The buzz around ChatGPT is undeniable. But many conversational AI vendors are telling the same story – ChatGPT is unsuitable for enterprise use cases.

Large Language Models (LLMs) enable conversational assistants to understand the intent behind utterances, even when they haven’t seen those exact utterances before. Although ChatGPT is powerful at language generation, it achieves only 88% accuracy on our challenging internal benchmarks, compared with 92% for PolyAI’s ConveRT model. Without additional training data, ChatGPT would correctly understand the intent behind only 88 out of every 100 utterances.
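
To make the gap concrete, the short calculation below converts each accuracy figure into misunderstood utterances per 100. It is a sketch that only re-derives the numbers quoted above, not our benchmark code, and `error_count` is a hypothetical helper. ChatGPT misreads 12 utterances per 100 and ConveRT misreads 8, so ChatGPT makes 1.5 times as many errors.

```python
# Hypothetical worked example: restate the quoted accuracies (88% vs 92%)
# as misclassified utterances per 100 test utterances.

def error_count(accuracy: float, n_utterances: int = 100) -> int:
    """Number of misclassified utterances out of n_utterances at a given accuracy."""
    return round((1.0 - accuracy) * n_utterances)


chatgpt_errors = error_count(0.88)  # 12 misunderstood utterances per 100
convert_errors = error_count(0.92)  # 8 misunderstood utterances per 100

# How many times more mistakes ChatGPT makes than ConveRT.
extra_errors_ratio = chatgpt_errors / convert_errors

print(chatgpt_errors, convert_errors, extra_errors_ratio)  # prints: 12 8 1.5
```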

That doesn’t mean PolyAI can’t benefit from ChatGPT. We’ve found several areas where we can use the technology to improve the customer experience with conversational assistants.

Before we show you, let’s do a quick primer on LLMs and why other conversational AI vendors are skeptical.

Large Language Models for enterprise conversational AI

Large Language Models are a relatively recent breakthrough in conversational AI. LLMs are trained on vast datasets: millions or billions of text samples, typically scraped from the internet (think social media sites, product descriptions, movie and television scripts, news articles, FAQs, etc.). Thanks to this scale, LLMs can often accurately understand the meaning of words and sentences without necessarily having seen those exact examples before.

At PolyAI, we’ve created our own proprietary LLM called ConveRT. The ConveRT model has been trained on millions of conversational samples, making it around 10% more accurate in low-data settings than models from Google Dialogflow, IBM Watson, and Rasa.

Many conversational AI vendors use LLMs, some proprietary and some licensed from other providers. So why is ChatGPT different?

PolyAI’s ConveRT is a retrieval model. This means it can understand the intent behind an input utterance and select the most appropriate response from a pool of responses.
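
Here is a minimal sketch of the retrieval idea. It rests on stated assumptions: the response pool is invented, and a toy bag-of-words `embed` function stands in for a learned neural encoder. It is not ConveRT’s architecture. The sketch scores each candidate response against the input by cosine similarity and returns the best one, so the output is always one of the pre-written responses.

```python
import math
import re
from collections import Counter

# Hypothetical pre-approved response pool for a logistics client.
RESPONSE_POOL = [
    "I'm sorry your package hasn't arrived. Can I have your order number?",
    "You can change your delivery address in the app under 'My orders'.",
    "Our opening hours are 9am to 5pm, Monday to Friday.",
]


def embed(text: str) -> Counter:
    """Toy bag-of-words 'encoder' standing in for a learned neural encoder."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(utterance: str, pool: list[str]) -> str:
    """Score every candidate against the utterance and return the best one.

    Whatever the input, the result is always an entry from `pool`.
    """
    query = embed(utterance)
    return max(pool, key=lambda response: cosine(query, embed(response)))


# Prints the first (missing-package) response from the pool.
print(retrieve("My package never arrived, where is it?", RESPONSE_POOL))
```

Because `retrieve` can only return an entry from `pool`, a poor match produces a wrong but still approved reply, never a new one.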

ChatGPT is a generative model. It can understand the intent behind an input utterance and generate a response from scratch, based on the data it has been trained on.
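
For contrast, here is a toy generative model. For illustration it substitutes a simple bigram model for ChatGPT. ChatGPT uses a large neural network that conditions on the whole preceding context, not just the last token. The corpus is also hypothetical. What matters is the shape of the loop: the reply is sampled one token at a time, so the model can produce sentences that never appeared in its training data.

```python
import random
from collections import defaultdict

# Hypothetical toy corpus of "training" replies.
CORPUS = [
    "your package is on its way",
    "your refund is on its way",
    "your package is delayed",
]


def train_bigrams(sentences: list[str]) -> dict[str, list[str]]:
    """Record, for every token, the tokens observed immediately after it."""
    table: dict[str, list[str]] = defaultdict(list)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            table[prev].append(nxt)
    return dict(table)


def generate(table: dict[str, list[str]], rng: random.Random, max_tokens: int = 20) -> str:
    """Sample a reply one token at a time, each conditioned on the previous token."""
    token, output = "<s>", []
    for _ in range(max_tokens):
        token = rng.choice(table[token])
        if token == "</s>":
            break
        output.append(token)
    return " ".join(output)


table = train_bigrams(CORPUS)
rng = random.Random(0)  # fixed seed so the run is reproducible
samples = {generate(table, rng) for _ in range(200)}

# Sampled replies that appear nowhere in the corpus.
print(sorted(samples - set(CORPUS)))
```

With this corpus the sampler can emit "your refund is delayed", a plausible sentence that no one wrote or approved.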

Retrieval models are more suitable for customer service use cases because they can’t go off-script: every reply comes from a pool of responses the business has already approved.

Generative models create responses from scratch, so an enterprise that chose a generative approach for customer service automation would have little control over what its assistant says. That makes it difficult to stay within business context, policies, and compliance requirements. And while OpenAI has worked to enable ChatGPT to identify toxic language, many people have found unacceptable biases in its output, the kind that would be the death of any respectable brand.

But that doesn’t mean enterprises can’t benefit from ChatGPT. Far from it…

How will enterprises benefit from ChatGPT?

The most practical enterprise applications of ChatGPT will use the technology to improve the performance of customer-facing conversational assistants. Here are a few examples of how we’re implementing ChatGPT at PolyAI.

Using ChatGPT for assistant simulation

We’re now using ChatGPT to simulate a real-world testing environment for our conversational assistants.

We write prompts that get ChatGPT to produce utterances similar to those we would expect from real customers. For example, for a logistics company, we might use a prompt like, “Imagine you ordered a package, but it never arrived. Get on the phone with a contact center agent and find out what happened.”
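
Here is a sketch of how role-play prompts like this could be templated across many scenarios. The scenario list, template, and function names are hypothetical. The `role`/`content` message layout assumes a chat-style API such as OpenAI’s, and actually sending the messages is left out. The first scenario reproduces the example prompt above word for word.

```python
# Hypothetical scenario list for a logistics client: (situation, goal) pairs.
SCENARIOS = [
    ("you ordered a package, but it never arrived", "find out what happened"),
    ("your parcel arrived damaged", "ask for a replacement"),
    ("you need to change the delivery address on an order", "get it updated"),
]

# Hypothetical role-play template.
TEMPLATE = (
    "Imagine {situation}. Get on the phone with a contact center agent "
    "and {goal}."
)


def build_customer_prompt(situation: str, goal: str) -> str:
    """Fill the role-play template for one scenario."""
    return TEMPLATE.format(situation=situation, goal=goal)


def build_roleplay_messages(situation: str, goal: str) -> list[dict[str, str]]:
    """Chat-style messages asking the model to act as the caller (assumed format)."""
    return [
        {"role": "system",
         "content": "You are a customer calling a contact center. "
                    "Reply only with what the caller would say."},
        {"role": "user", "content": build_customer_prompt(situation, goal)},
    ]


for situation, goal in SCENARIOS:
    print(build_customer_prompt(situation, goal))
```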

By having ChatGPT role-play as customers, we’re able to launch voice assistants with higher accuracy than ever before.

To do this, we use a form of adversarial training, similar in spirit to the self-play approach DeepMind used to build AlphaGo, the first computer program to defeat a professional human Go player. DeepMind trained an initial version of the program and then repeatedly pitted it against earlier versions of itself, so that it learned from its mistakes with each round.
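
The "learn from its mistakes" loop can be illustrated with a deliberately simple cycle that simulates, evaluates, and retrains. This is a hypothetical sketch, not our pipeline and not adversarial training in the strict sense. Hard-coded utterances stand in for ChatGPT-generated callers, and a word-overlap `classify` function stands in for the real intent model. Each round, any misunderstood utterances are added back as training examples.

```python
import re

# Hypothetical stand-in for ChatGPT-generated caller utterances, each labelled
# with the intent the simulated customer was asked to express.
SIMULATED_CALLS = [
    ("my parcel never showed up", "missing_package"),
    ("the box came smashed", "damaged_package"),
    ("i need to send it to a different address", "change_address"),
    ("where is my order", "missing_package"),
]

# Hypothetical initial training examples for a toy intent classifier.
TRAINING = {
    "missing_package": ["my package never arrived"],
    "damaged_package": ["my package arrived damaged"],
    "change_address": ["change my delivery address"],
}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def classify(utterance: str, training: dict[str, list[str]]) -> str:
    """Toy classifier: pick the intent whose closest example shares the most words."""
    words = tokens(utterance)

    def score(intent: str) -> int:
        return max(len(words & tokens(example)) for example in training[intent])

    # max() returns the first maximal item, so dict order breaks ties.
    return max(training, key=score)


def simulation_round(calls, training) -> tuple[float, list[tuple[str, str]]]:
    """Run every simulated call through the classifier and collect the misses."""
    misses = [(utterance, intent) for utterance, intent in calls
              if classify(utterance, training) != intent]
    return 1 - len(misses) / len(calls), misses


training = {intent: list(examples) for intent, examples in TRAINING.items()}
history = []
for round_number in range(1, 4):
    accuracy, misses = simulation_round(SIMULATED_CALLS, training)
    history.append(accuracy)
    print(f"round {round_number}: accuracy {accuracy:.2f}, {len(misses)} misses")
    # Learn from mistakes: add each misunderstood utterance as a new example.
    for utterance, intent in misses:
        training[intent].append(utterance)
```

For brevity the sketch reuses the same simulated calls every round. A realistic loop would generate fresh callers each time, so the assistant is not simply memorizing its test set.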

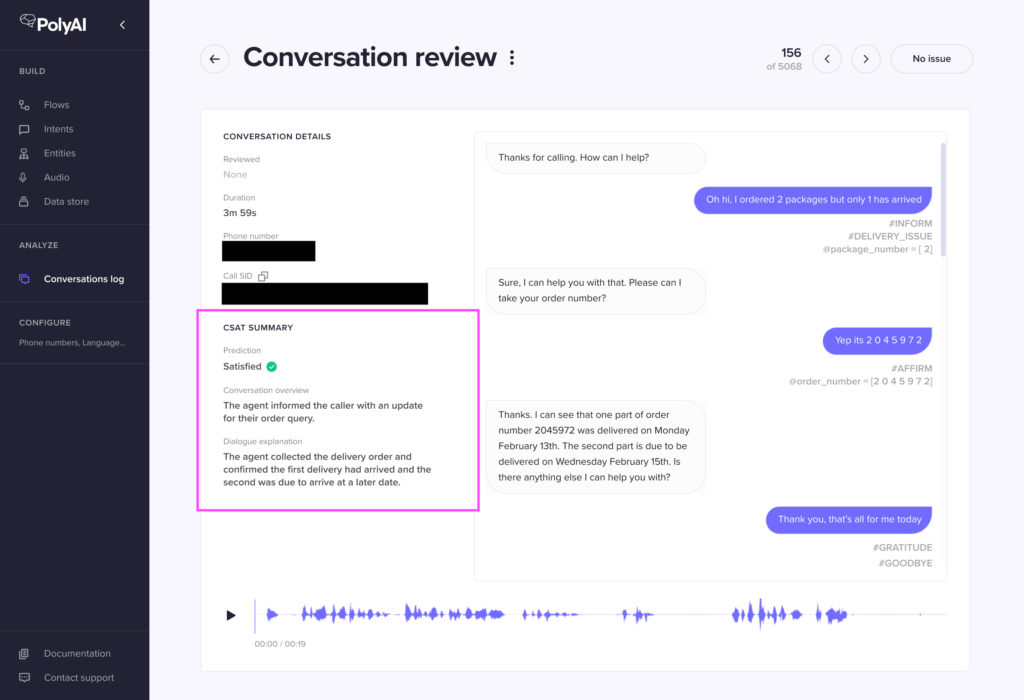

CSAT predictions

PolyAI is launching a new module that predicts customer satisfaction for every call. This will give our clients a real-time view of customer satisfaction without requiring callers to sit through lengthy surveys.

We’re using ChatGPT to summarize conversations into short one-liners that our dialogue design team can use to quickly identify areas for optimization. This means we can make improvements faster than ever, with very little manual effort.
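
Here is a sketch of the summarization step, assuming a chat-style message format. The transcript, the 20-word budget, and both function names are hypothetical, and the model reply used below is invented for illustration. `clean_one_liner` catches replies that spill onto several lines or exceed the budget before they reach the design team.

```python
# Hypothetical call transcript as (speaker, text) turns.
TRANSCRIPT = [
    ("caller", "Hi, my parcel was meant to arrive yesterday but it hasn't."),
    ("assistant", "Sorry to hear that. Can I have your tracking number?"),
    ("caller", "I don't have it, I only have my email address."),
    ("assistant", "I'm afraid I need the tracking number to look it up."),
]

MAX_WORDS = 20  # assumed length budget for a "one-liner"


def build_summary_messages(transcript: list[tuple[str, str]]) -> list[dict[str, str]]:
    """Chat-style request asking for a one-sentence summary (assumed format)."""
    dialogue = "\n".join(f"{speaker.upper()}: {text}" for speaker, text in transcript)
    return [
        {"role": "system",
         "content": f"Summarize the call below in one sentence of at most "
                    f"{MAX_WORDS} words. Focus on what went wrong, if anything."},
        {"role": "user", "content": dialogue},
    ]


def clean_one_liner(reply: str) -> str:
    """Collapse a model reply onto a single line and enforce the word budget."""
    return " ".join(reply.split()[:MAX_WORDS])


# Invented reply, standing in for what the model might return.
hypothetical_reply = (
    "Caller wanted a late parcel traced but had no tracking number;\n"
    "assistant could not look it up by email."
)
print(clean_one_liner(hypothetical_reply))
```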

The future of ChatGPT for conversational assistants

It’s unlikely that enterprises will want to put their customer service entirely in the hands of generative models. In the same way that call center agents follow scripts, conversational assistants for customer service should always have an on-brand, pre-approved bank of responses.

That said, enterprises should take advantage of breakthrough technologies to enhance the customer experience.

At PolyAI, we’re committed to making cutting-edge conversational technologies accessible and beneficial to enterprises.

Thanks to Paweł Budzianowski for input on this post.