We’ve recently been working with large contact centers to explore where conversational voice AI could offer the most value for their clients.

We focused on a large UK retailer that employs contact centers to handle calls about deliveries, refunds, existing order inquiries, and placing new orders.

We ruled out automating the process of placing new orders. We generally recommend first automating interactions that are high value to your customers, but low value to your business. In the case of a retailer, customer orders are one of – if not the – most valuable interactions the company can have with the customer and are currently best suited to either e-commerce or human-to-human sales.

Chasing deliveries, refunds, or asking questions about current orders are all great examples of interactions that are important to customers, but not so high value to the business. Of course, a retail company that offers poor service in these areas is likely to feel the burn, but these are not the core transactions that drive the business.

While each of these query types is ripe for automation, we identified another interaction that spanned all queries about existing orders: customer identification and verification (ID&V). Each call about an existing order (the vast majority of calls) included around 30 seconds of ID&V, during which the customer was asked to confirm their order number, name and address for security purposes.

In this post, we’re going to explore the power of conversational voice AI and explain how PolyAI uses speech recognition and machine learning to handle ID&V across contact centers in any number of different industries.

ID&V with Artificial Intelligence

Using automatic speech recognition, customers can give their information without having to wait on hold to speak to a human. The problem is that speech recognition often struggles to understand exactly what was said.

But are human beings really that much better? The kind of information you’re usually required to give during ID&V is the same sort of information that will have customers spelling out loud or dusting off their phonetic alphabet. What if AI could eliminate the need for this?

At PolyAI, we’ve created a solution that records not only what it thinks it heard, but every possible thing it might have heard. It’s a bit like when someone says something and you don’t quite catch it. Imagine if you had recorded it and could play it back over and over in your head until you realized what the other person meant, all in under a second.
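Speech recognizers commonly expose this idea as an N-best list: several candidate transcripts for the same utterance, each with a score. The sketch below is a minimal illustration, assuming the recognizer returns a log-likelihood per hypothesis. `Hypothesis` and `rank_hypotheses` are hypothetical names rather than PolyAI's API, and the scores in the example are invented.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    text: str
    probability: float


def rank_hypotheses(scored: dict[str, float]) -> list[Hypothesis]:
    """Turn raw recognizer scores (assumed log-likelihoods) into a ranked N-best list.

    Every hypothesis is kept, not just the top one; scores are normalized
    into probabilities with a softmax.
    """
    max_score = max(scored.values())  # subtract the max for numerical stability
    weights = {text: math.exp(s - max_score) for text, s in scored.items()}
    total = sum(weights.values())
    ranked = [Hypothesis(text, w / total) for text, w in weights.items()]
    return sorted(ranked, key=lambda h: h.probability, reverse=True)


if __name__ == "__main__":
    # Hypothetical scores for a single spoken postcode.
    nbest = rank_hypotheses({"AB12 3CD": -1.2, "AP12 3DC": -1.9, "AB12 3TD": -3.5})
    for h in nbest:
        print(f"{h.text}: {h.probability:.2f}")
```

Keeping the whole list, rather than discarding everything but the best guess, is what lets later steps recover when the top hypothesis turns out to be wrong.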

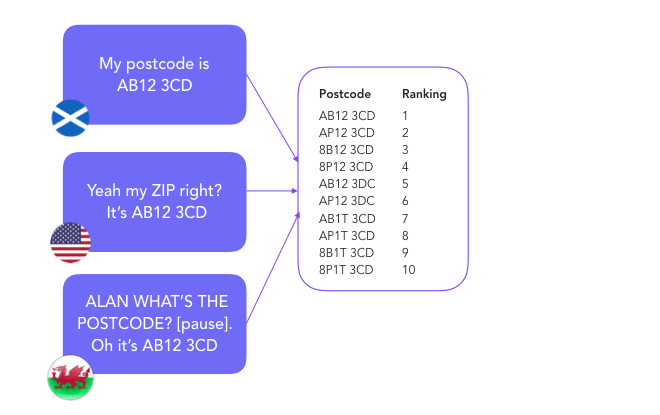

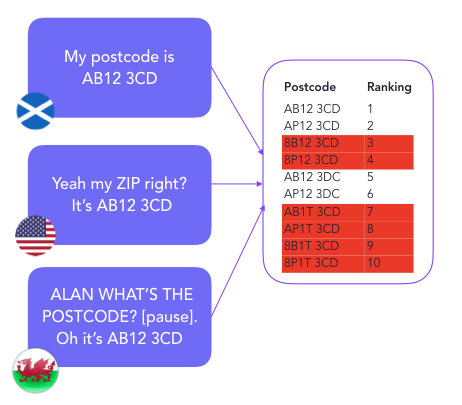

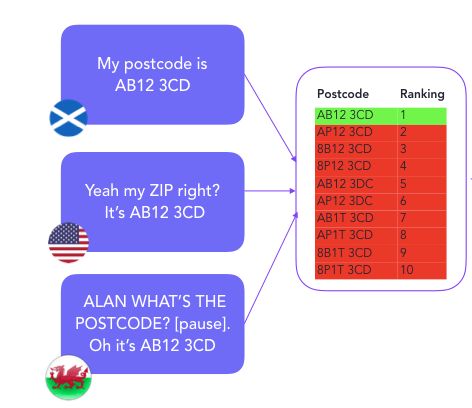

Because the system doesn’t rely solely on speech recognition and can use context to interpret information, it can handle different accents. The diagram above shows three different people giving their postcodes with different accents and different wording. The ID&V system ranks all possible postcodes in order of how likely it thinks each one is. Note that it records variations between ‘P’ and ‘B’, between ‘A’ and ‘8’, and so on.
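One simple way to produce spelling variations like these is to expand the heard postcode using a table of characters that sound alike. The sketch below assumes a hand-written confusion table (`CONFUSABLE`, a hypothetical name containing only the pairs mentioned above) and a fixed penalty per substitution; PolyAI's system may well derive its variants differently, for example directly from the recognizer's output.

```python
from itertools import product

# Hypothetical confusion table: characters that are easy to mishear over the phone.
# Only the pairs mentioned in the text are included; a real table would be larger.
CONFUSABLE = {"P": "PB", "B": "BP", "A": "A8", "8": "8A"}


def expand_variants(heard: str, penalty: float = 0.3) -> list[tuple[str, float]]:
    """Return every spelling reachable by swapping confusable characters.

    Each substitution multiplies the score by `penalty`, so variants that
    differ more from what was heard are ranked lower.
    """
    # For each position, the characters it could plausibly have been.
    options = [CONFUSABLE.get(ch, ch) for ch in heard]
    variants = []
    for chars in product(*options):
        candidate = "".join(chars)
        swaps = sum(a != b for a, b in zip(candidate, heard))
        variants.append((candidate, penalty ** swaps))
    return sorted(variants, key=lambda v: v[1], reverse=True)


if __name__ == "__main__":
    for postcode, score in expand_variants("AB12 3CD"):
        print(postcode, round(score, 2))
```

Some of the generated variants will not be real postcodes at all, which is why the next step filters them.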

The system then checks the potential postcodes against a database of postcodes in the UK and eliminates anything that isn’t an actual postcode.
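A minimal sketch of this filtering step, assuming the candidates arrive as a ranked list of `(postcode, score)` pairs. A cheap format check rejects obvious non-postcodes, such as a variant like "8B12 3CD" where an ‘A’ was heard as ‘8’, before the database lookup; `known_postcodes` is a hypothetical in-memory stand-in for the real UK postcode database.

```python
import re

# Loose shape of a UK postcode: outward code (e.g. "AB12") + space + inward code ("3CD").
# This is a rough format check only; it does not enforce every Royal Mail rule.
POSTCODE_SHAPE = re.compile(r"^[A-Z]{1,2}[0-9][A-Z0-9]? [0-9][A-Z]{2}$")


def filter_real_postcodes(
    candidates: list[tuple[str, float]],
    known_postcodes: set[str],
) -> list[tuple[str, float]]:
    """Keep candidates that look like a postcode and exist in the database.

    The input ranking order is preserved.
    """
    return [
        (pc, score)
        for pc, score in candidates
        if POSTCODE_SHAPE.match(pc) and pc in known_postcodes
    ]


if __name__ == "__main__":
    known = {"AB12 3CD", "AP12 3DC"}  # hypothetical database contents
    ranked = [("AB12 3CD", 0.6), ("8B12 3CD", 0.2), ("AP12 3DC", 0.15), ("AB12 3XY", 0.05)]
    print(filter_real_postcodes(ranked, known))
```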

In this example, the system has been left with two possible postcodes, but that doesn’t mean it has to fail. The customer will also have provided one or two other pieces of information (depending on the required security level). The system can check how well these pieces of information match a single customer and then either block access to the account or verify the caller for the next stage.

In this example, the system has narrowed the postcode down to either:

- AB12 3CD or

- AP12 3DC

Both of these are the postcodes of real customers, according to the CRM, but only one (or none!) of them is going to be that of the person calling.

Let’s say the customer identifies themselves as John Smith.

The system may hear the name Joe Smith or Tom Smith, as well as John Smith.

If AB12 3CD is registered with John Smith and AP12 3DC is registered with Tom Smith, the system will need to ask the customer to repeat their information. This is exactly what would happen with a human agent who had misheard or mistyped a piece of information.

However, if AB12 3CD is registered with John Smith and AP12 3DC is registered with Kylie Whitehead, the system can safely verify John Smith.

If, however, the customer gives their name as Nikola Mrksic, which matches neither record, the system will hand off to a human agent for further investigation.
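The three outcomes above can be sketched as a single decision rule: collect every (postcode, name) pair that the CRM links to one customer, then verify if exactly one pair survives, ask again if several do, and hand off if none do. This is an illustrative sketch, not PolyAI's implementation; the CRM is assumed to be a hypothetical mapping from postcode to the names registered there, and `Outcome` and `verify_caller` are hypothetical names.

```python
from enum import Enum
from typing import Optional, Tuple


class Outcome(Enum):
    VERIFIED = "verified"
    ASK_AGAIN = "ask the caller to repeat"
    HAND_OFF = "hand off to a human agent"


def verify_caller(
    postcodes: list[str],
    names: set[str],
    crm: dict[str, set[str]],
) -> Tuple[Outcome, Optional[Tuple[str, str]]]:
    """Decide the ID&V outcome from candidate postcodes and candidate names.

    `crm` maps each postcode to the names of customers registered there
    (a hypothetical schema used only for this sketch).
    """
    # Every (postcode, name) pair that the CRM says belongs to one customer.
    matches = {
        (pc, name)
        for pc in postcodes
        for name in crm.get(pc, set()) & names
    }
    if len(matches) == 1:
        return Outcome.VERIFIED, next(iter(matches))
    if len(matches) > 1:
        return Outcome.ASK_AGAIN, None  # ambiguous, just as a human agent would re-ask
    return Outcome.HAND_OFF, None  # nothing lines up: escalate to a person


if __name__ == "__main__":
    postcodes = ["AB12 3CD", "AP12 3DC"]
    heard_names = {"John Smith", "Joe Smith", "Tom Smith"}
    crm = {"AB12 3CD": {"John Smith"}, "AP12 3DC": {"Kylie Whitehead"}}
    print(verify_caller(postcodes, heard_names, crm))
```

With the CRM records from the second scenario, only John Smith at AB12 3CD lines up, so the caller is verified; swapping Kylie Whitehead for Tom Smith produces two matches and a request to repeat.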

Humans & AI working together

In this example, conversational voice AI is able to get the required information faster than a human agent would and is twice as likely as a human agent to get ID&V right the first time.

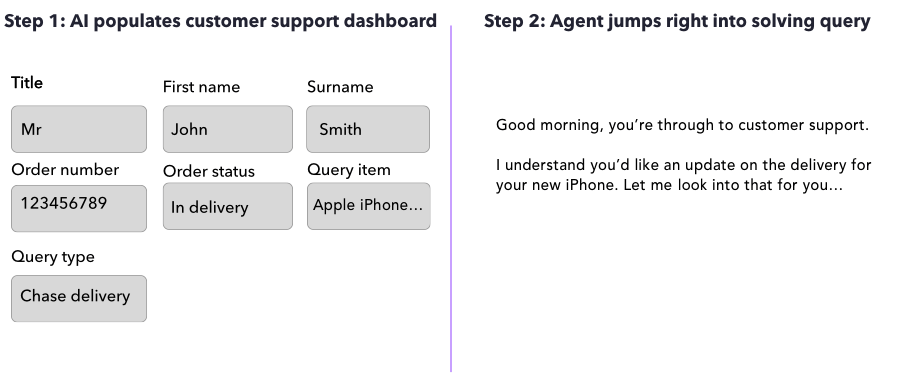

The next step in this flow, as we explored it for the retail client, was agent handoff: the agent immediately sees the information needed to resolve the query, and sees none of the customer’s private information unless it is necessary.

This means the agent can jump straight into solving the customer’s query. This saves both of them time, keeps customers’ personal information from being stored, and gives both customer and agent a more satisfying interaction.
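One way to express "only what the agent needs" is an allow-list of fields per query type, applied when the handoff summary is built. The sketch below assumes a hypothetical `VerifiedCall` record and `AGENT_FIELDS` policy; the field names and the policy itself are illustrative, not the schema used for the retail client.

```python
from dataclasses import asdict, dataclass


@dataclass
class VerifiedCall:
    customer_name: str
    postcode: str
    order_number: str
    query_type: str  # e.g. "delivery" or "refund"
    order_status: str


# Hypothetical policy: the fields an agent sees by default for each query type.
AGENT_FIELDS = {
    "delivery": {"order_number", "order_status"},
    "refund": {"order_number", "order_status"},
}


def agent_view(call: VerifiedCall) -> dict[str, str]:
    """Build the handoff summary, keeping only the fields the query needs.

    Personal details such as name and postcode are omitted unless the
    policy for this query type lists them.
    """
    allowed = AGENT_FIELDS.get(call.query_type, set()) | {"query_type"}
    return {k: v for k, v in asdict(call).items() if k in allowed}


if __name__ == "__main__":
    call = VerifiedCall("John Smith", "AB12 3CD", "ORD-1001", "delivery", "out for delivery")
    print(agent_view(call))
```

An allow-list fails safe: a field added to the record later stays hidden from agents until someone deliberately adds it to the policy.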

Learn more about call center identity verification or, if you’d like to discuss the possibility of using conversational voice AI in your contact center, get in touch with PolyAI today.