Two of the main barriers holding companies back from embracing conversational AI and voicebots are cost and previous bad experiences.

More often than not, these two barriers are linked. Conversational AI companies have previously over-promised and under-delivered, thanks to complex technological approaches that are costly and unsustainable.

In this post, we’ll explain how most conversational AI companies approach building voicebots, and why this often results in poor experiences that businesses end up paying over the odds for. Then we’ll show you some of the PolyAI secret sauce that helps us create cost-effective, scalable voicebots that can be updated in minutes.

The traditional approach

Traditionally, conversational agents are programmed to listen out for particular intents – tasks or actions that define the end-user’s intention for a single conversational turn. The scope of the build will outline what information the bot needs to understand and/or collect, and engineers will build a solution accordingly.

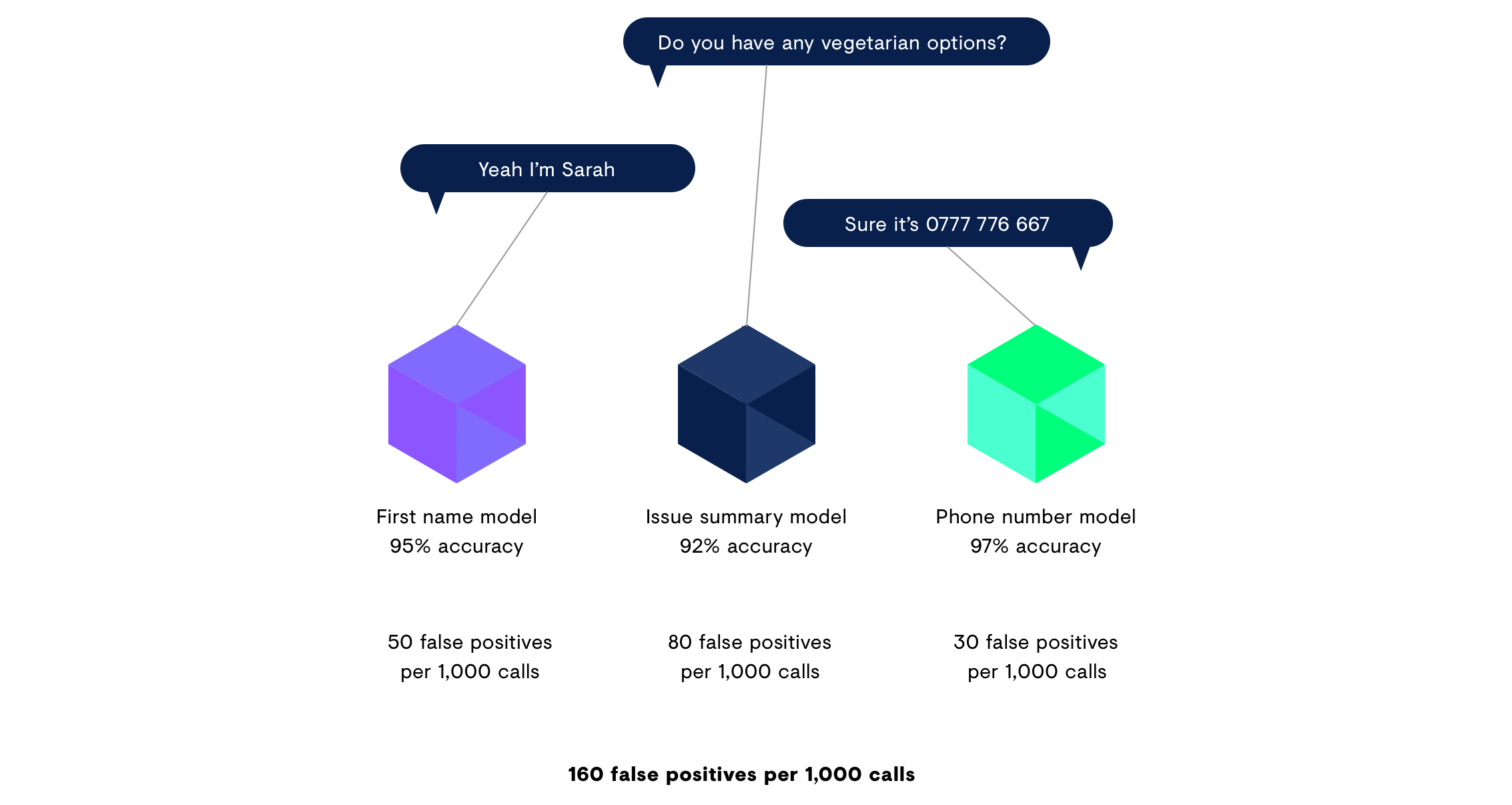

The most common approach – used by most conversational AI companies – is to create a separate neural network model for each intent the bot is required to identify.

Let’s say you want a virtual agent to answer questions about a restaurant and record the caller’s name and phone number.

Most conversational AI companies will create three individual models: one to listen out for the name, one to listen out for the phone number, and one to listen out for the questions a customer might ask.

This is the standard approach taken to creating conversational voicebots, and it works. But it brings up a lot of problems that take a lot of time and money to solve.

Firstly, no model is going to be 100% accurate. Just like human beings, voicebots will not understand customers first time, every time. This is simply the nature of communication, and of artificial intelligence.

Even in the highly unlikely scenario where each of the individual models is working at 96% accuracy (bear in mind that 75% accuracy is typical of most commercial conversational AI solutions), overall accuracy is diluted when multiple models work together, because the errors of each model compound.

The second issue is that several models working together, but listening out for different things, are very likely to cause confusion.
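To put rough numbers on that dilution, here is a minimal Python sketch. It assumes the models make their mistakes independently and that all of them must be right for the bot to handle the request correctly; real systems will not behave this neatly, and `pipeline_accuracy` is a name made up for this illustration.

```python
def pipeline_accuracy(per_model_accuracy: float, n_models: int) -> float:
    """Chance that every model is right at once, assuming independent errors."""
    return per_model_accuracy ** n_models


# Three models (name, phone number, questions), as in the restaurant example.
for accuracy in (0.96, 0.75):
    combined = pipeline_accuracy(accuracy, 3)
    print(f"{accuracy:.0%} per model -> {combined:.1%} for all three together")
```

Under these assumptions, three models at 96% are all correct only about 88.5% of the time, and three models at 75% only about 42.2% of the time.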

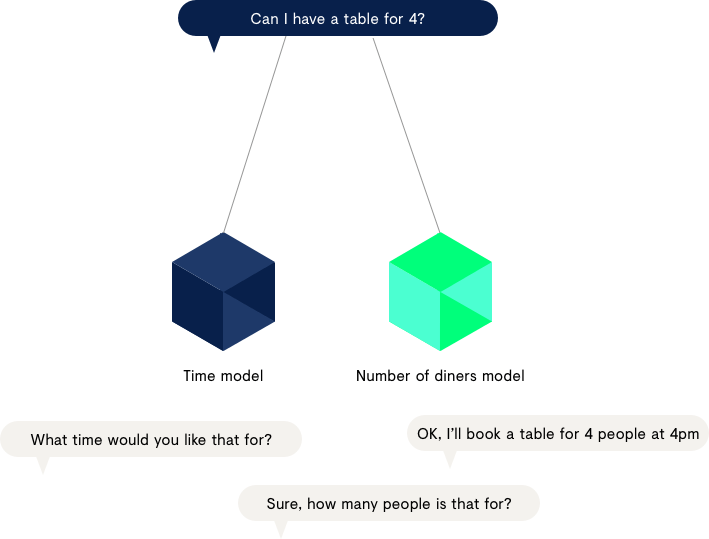

Let’s say your bot is taking a reservation at a restaurant. The customer might ask for ‘a table for 4’.

This bot will have a model listening out for time and another model listening out for the number of people the reservation is for. In this example, each model will understand ‘4’ as the information it is looking out for.

Because the same piece of information has been picked up by 2 models, there will be 2 possible answers the bot could give. Unless programmed otherwise, the bot will need to pick one of these responses at random. In order to prevent this, the engineer will need to anticipate every single instance where overlapping information could cause confusion, and program in the desired behaviour.

Thirdly, this approach will require a significant amount of work when you want to add or update information. Let’s say you want to add some information about your smoking policy. First, your engineers will need to create a model to listen out for the ‘smoking’ intent. But you might find that this new information overlaps with information in another model, such as ‘we offer a smoked salmon starter.’ This means the engineer will need to program both the ‘smoking’ and the ‘menu’ models to give a specific answer to a specific query. There may be a model listening out for dietary requirements (pescatarian, vegetarian, etc.) that could be affected, or even a model listening out for safety concerns (smoke detectors) that will also need to be programmed.
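The clash described above can be sketched in a few lines of Python. This is a toy illustration only: the two "models" are simple regular expressions standing in for separate neural networks, and every function name here is hypothetical.

```python
import re
from typing import Dict, Optional


def extract_time(utterance: str) -> Optional[str]:
    """Hypothetical time model: treats a bare number from 1 to 12 as an hour."""
    match = re.search(r"\b(1[0-2]|[1-9])\b", utterance)
    return match.group(1) if match else None


def extract_party_size(utterance: str) -> Optional[str]:
    """Hypothetical party-size model: treats any bare number as a head count."""
    match = re.search(r"\b(\d+)\b", utterance)
    return match.group(1) if match else None


def resolve(utterance: str, claims: Dict[str, Optional[str]]) -> Dict[str, Optional[str]]:
    """One hand-written rule of the many an engineer would have to add."""
    if re.search(r"\btable for \d+\b", utterance):
        return {"party_size": claims["party_size"]}
    return claims


utterance = "a table for 4"
claims = {
    "time": extract_time(utterance),
    "party_size": extract_party_size(utterance),
}
print(claims)                      # both models claim the same '4'
print(resolve(utterance, claims))  # the rule keeps only the party size
```

The `resolve` rule only covers the phrase "table for N". Every other ambiguous phrasing would need its own rule, which is where the engineering time goes.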

One model to rule them all

At PolyAI we do things differently.

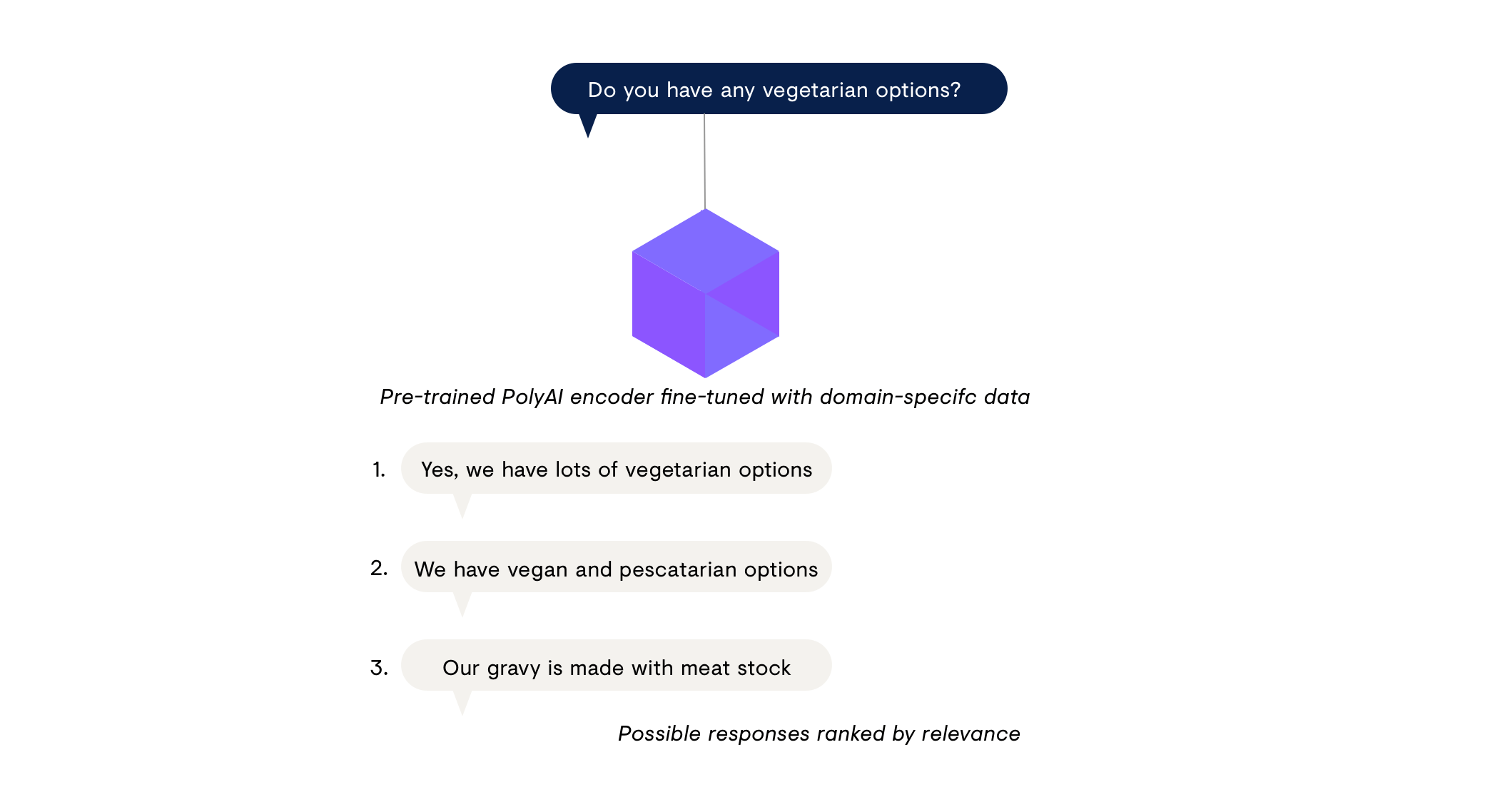

Firstly, we only use one pre-trained model to understand intent. Our Encoder model is the most accurate on the market, understanding what customers want, regardless of the language they use.

Secondly, our model is not programmed to listen out for particular intents. Our Encoder model finds all of the possible responses to a query, ranks them in order of relevance, and delivers the top ranking response to the end-user.

This means that the model can give responses it hasn’t been programmed to give, as long as it has the relevant information. Traditional conversational AI companies would need to create a model to listen out for dietary requirement intents, for example. Our pre-trained Encoder model, by contrast, can understand questions around specific diets, scan the menus it has access to, and tell the user that there are options to suit them.

Instead of needing to update a number of models with new content, here at PolyAI we simply need to update the content itself and let the model do the rest.
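The general idea of ranking responses with a single encoder, and of updating content rather than models, can be sketched as follows. This is not PolyAI's Encoder model: the `encode` function below is a deliberately crude bag-of-words stand-in (a real pre-trained encoder would produce dense embeddings that cope with different wordings), and all names and example responses are assumptions made for illustration.

```python
import math
import re
from collections import Counter
from typing import List


def encode(text: str) -> Counter:
    """Stand-in encoder: word counts instead of learned embeddings."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def rank_responses(query: str, responses: List[str]) -> List[str]:
    """Score every candidate response against the query, best first."""
    encoded_query = encode(query)
    return sorted(responses, key=lambda r: cosine(encoded_query, encode(r)), reverse=True)


content = [
    "We are open from noon until 11pm every day.",
    "Our menu includes vegetarian and vegan options.",
]
print(rank_responses("Do you have vegan options?", content)[0])

# Adding knowledge means adding content; the ranking code is untouched.
content.append("Smoking is not allowed inside the restaurant.")
print(rank_responses("Is smoking allowed in the restaurant?", content)[0])
```

In this sketch, supporting the smoking policy is a single `append` to the content list, with no new model and no changes to existing ones.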

This approach means we can update voicebot knowledge in minutes, opening up transient content like ‘dish of the day’ or sale prices for automation.

If you’d like to learn more about building a voicebot for your business, get in touch with PolyAI today.